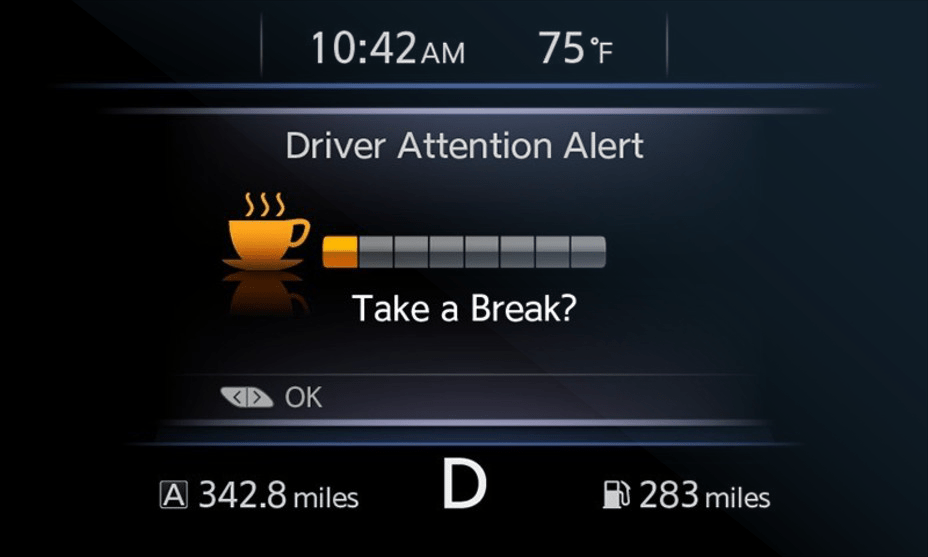

On a recent trip, I was driving a rented car when an incessant beeping started and a light appeared on the dashboard. I glanced down, expecting an alert about an oil change or tire pressure, only to see a mysterious coffee cup icon and the message “Driver Attention Level Low. Take a break.” I tried ignoring it and kept driving. My attention was fine, thank you very much. I had only been driving for about 40 minutes, it was morning, and as a morning person, I felt alert and ready to take on the world. The car continued to beep and flash the alert. I talked back to the car: “You’re wrong! I don’t need a break. My eyes are on the road and I’m good.” The car kept beeping and flashing, and soon the steering wheel began to vibrate. At that point, I decided to pull over. I was perplexed. What algorithm was at work here?!

A call to the rental car company helped me get to the bottom of this mystery. A previous driver had reported the same issue. The company had assumed it was a one-off glitch, checked the car, and believed the problem had been resolved. Now that there was a second report, they would take the car out of service and gladly exchange it for another vehicle. I was given a choice of vehicles, and a short time later I was cruising down the road in, well, a ‘less smart’ car.

Predictive algorithms are everywhere and are changing the way we make decisions. Ride-sharing services such as Uber match drivers to passengers, and job recruiting sites screen resumes and select candidates for interviews. In healthcare, predictive and diagnostic algorithms can detect subtle signs of cancerous lesions in the lungs, breast, kidneys, and other organs; predict which patients will progress (for example, which diabetic patients will develop diabetic retinopathy or kidney disease); and predict which patients are more or less likely to stick to a specific treatment plan (for example, self-injectable therapies in Crohn’s disease).

Many people appear to have a love-hate relationship with wearables, sensors, scanners, and the algorithms that enable them. We appreciate their capabilities and the real-time data they provide, which helps us and our healthcare providers improve our health. Algorithms are increasingly demonstrating superior diagnostic acumen, in certain situations even outperforming clinicians, thanks to their ability to comb through large amounts of data quickly and efficiently. But we are wary of anything that appears to decide for us. We are especially resistant to anything that appears to make a decision with social consequences or one that involves personal preferences.

Research shows that consumers and patients are hesitant to participate in healthcare decisions that are guided by AI. Why? In a nutshell, trust.

In a fascinating series of studies, Chiara Longoni and her colleagues at Boston University explored how receptive people are to the use of AI in healthcare.

They identified several reasons why people are hesitant to use AI, including:

- A belief that AI-enabled algorithms are incapable of understanding and responding to people’s unique needs (a concern known as uniqueness neglect).

- A conviction, despite evidence to the contrary, that people intuitively understand how human clinicians make medical decisions.

- An inability to understand or explain how AI works and makes decisions, which leads people to perceive it as a “black box” (often referred to among AI researchers as the explainability problem).

Four critical principles have been proposed in response to these concerns:

Fairness – AI-driven systems must be fair to all patients and groups, so training data sets must be free of bias. One common criticism leveled at algorithms is that they are prone to biases introduced by their human programmers and to broader societal biases. Much has been written about algorithmic bias.

Transparency – Transparency is essential throughout the development, implementation, and maintenance of all AI-enabled systems and algorithms.

Robustness – Security, privacy, safety, and quality controls are critical in AI-enabled systems.

Explainability – The decisions made by AI-enabled algorithms and systems must be understandable to their users, including healthcare professionals and patients. Explainability is a rapidly growing field of AI research.

These four principles are critical to remember as we move forward with the development, deployment, and implementation of algorithms and other AI-enabled technologies aimed at enhancing medical care.

As a society, we are right to be concerned about AI and its use in making decisions in healthcare or in other aspects of daily life.

But first, we must pay attention to our own decision-making.

We make decisions all the time, big or small, without really thinking about how we arrive at them or acknowledging any biases we might have. Let’s take a moment to consider driving skills. Do you consider yourself an average driver, or would you immediately say you are above average? Surveys have repeatedly shown that around 90% of people believe they are above-average drivers. That can’t be statistically true: by definition, only half of all drivers can be better than the median driver.

We rarely pause to consider whether our problem-solving approaches are the best fit for the situation at hand. We are all susceptible to cognitive biases (a topic for another day), but few of us can name more than a handful of them. Much of the time, the attention we pay to our own decisions, and, importantly, to how we make them, is low.

How can we create better healthcare environments in which we use AI to augment our own intelligence and make better decisions? There are two extremes we must avoid. The first is relying so heavily on our own judgment that we rarely use AI-enabled algorithms and tools in healthcare, depriving ourselves of their benefits. The second is becoming so accustomed to, and reliant on, the inputs and outputs of AI-enabled algorithms and tools that we lose sight of common sense. The real challenge in debates about AI-driven tools in healthcare, ironically, is a human one: to make smarter decisions in the digital health era, we cannot allow our attention to decisions, both human and AI-assisted, to ever become low.